In Oracle Cloud Infrastructure (OCI), the "hub and spoke" architecture is a common networking design used to connect multiple Virtual Cloud Networks (VCNs) in a centralized manner. This architecture is often implemented using Dynamic Routing Gateway (DRG) attachments and Transit Routing. Let me break down how it works:

Hub VCN: In the hub-and-spoke model, one VCN serves as the central hub where shared services or resources are located. This hub VCN typically hosts core services such as DNS, Active Directory, or shared databases.

Spoke VCNs: Spoke VCNs are additional VCNs that are connected to the hub VCN. Each spoke VCN usually contains resources specific to a particular department, application, or environment.

Dynamic Routing Gateway (DRG): The DRG serves as the central routing hub for connecting the hub VCN to other VCNs or on-premises networks. It facilitates the exchange of routing information between the hub VCN and the spoke VCNs.

DRG Attachments: Each VCN, hub or spoke, is connected to the DRG through a DRG attachment. A DRG attachment establishes a logical link between the VCN and the DRG, enabling traffic to flow between them, so spokes reach the hub (and each other) through the DRG rather than through direct links.

Transit Routing: Transit Routing is a feature in OCI that allows you to route traffic between different VCNs using the hub-and-spoke architecture. With Transit Routing, you can configure route tables in the hub VCN to direct traffic between spoke VCNs through the hub VCN.

Route Tables: Each VCN has its own route tables, which determine how traffic leaving a subnet is sent to destinations outside the VCN (traffic within the VCN is routed locally). In the hub-and-spoke model, route tables in the hub VCN are configured to route traffic between spoke VCNs.

Security: Security within the hub-and-spoke architecture is typically managed using Security Lists, Network Security Groups (NSGs), and other OCI security features. Access control can be enforced at the subnet level to restrict traffic between different environments.

By implementing a hub-and-spoke architecture using DRG attachments and Transit Routing in OCI, organizations can achieve centralized network management, simplified connectivity between VCNs, and improved security and compliance. This architecture is well-suited for scenarios where multiple VCNs need to communicate with each other while maintaining isolation and security boundaries.
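To make the route-table idea above a bit more concrete, here is a minimal sketch of how a route table picks a target: the most specific rule whose CIDR contains the destination wins. This is only an illustrative model written in plain shell, not an OCI tool or API. The function names, the rule format `CIDR=TARGET`, and the target labels are all assumptions made up for the example; `192.168.0.0/26` is the hub subnet CIDR that appears later in this post.

```shell
#!/bin/sh
# Illustrative model of route-table lookup; not an OCI command or API.

# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  _old_ifs=$IFS
  IFS=.
  # Intentional word splitting on dots into $1..$4.
  set -- $1
  IFS=$_old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr ADDR CIDR: succeed if ADDR falls inside CIDR.
ip_in_cidr() {
  _len=${2#*/}
  _mask=$(( (0xFFFFFFFF << (32 - _len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & _mask )) -eq $(( $(ip_to_int "${2%/*}") & _mask )) ]
}

# lookup_route DEST RULE...: each RULE is "CIDR=TARGET" (hypothetical format).
# Prints the target of the most specific matching rule; fails if none match.
lookup_route() {
  _dest=$1
  shift
  _best_len=-1
  _best_target=
  for _rule in "$@"; do
    _cidr=${_rule%%=*}
    if ip_in_cidr "$_dest" "$_cidr" && [ "${_cidr#*/}" -gt "$_best_len" ]; then
      _best_len=${_cidr#*/}
      _best_target=${_rule#*=}
    fi
  done
  if [ -n "$_best_target" ]; then echo "$_best_target"; else return 1; fi
}

# Hypothetical spoke route rules; the target labels are made up.
lookup_route 192.168.0.49 "192.168.0.0/26=drg:hub-vcn" "0.0.0.0/0=drg:transit-to-nat"
# prints "drg:hub-vcn"
lookup_route 8.8.8.8 "192.168.0.0/26=drg:hub-vcn" "0.0.0.0/0=drg:transit-to-nat"
# prints "drg:transit-to-nat"
```

The same reasoning helps when debugging the steps later in this post: if a packet goes somewhere unexpected, check which rule is the most specific match for its destination.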

The objective of this post is to show how DRG attachments and VCN route tables enable communication between the hub and spoke VCNs, and how a transit route lets resources provisioned inside a spoke VCN reach the internet through the hub VCN.

Spoke1 VCN:-

Spoke2 VCN:-

At this point, the security lists allow ingress from the Spoke1 VCN CIDR into Spoke2 and vice versa.

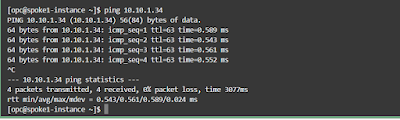

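I will now try to ping the Spoke2 instance from Spoke1.

As expected, it does not work, because the two VCNs are not yet connected to each other.

Next, I attach the two spoke VCNs to the DRG.

Now, in the route table of each spoke VCN, we add a new route rule that sends traffic for the other spoke through the DRG.

Ping now works in both directions between the spokes.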

The next requirement is that instances in a spoke VCN reach the internet through the hub VCN. This is done with a transit route.

On the hub compute instance, I add a secondary VNIC in the same subnet as the primary VNIC. The subnet is public, and its route table points to an Internet Gateway (IGW).

Under the DRG, create a new route distribution for the hub-vcn attachment

Create a new DRG route table for hub-vcn

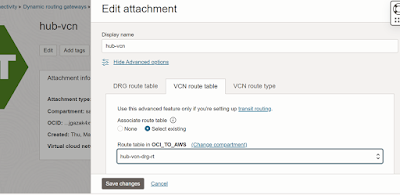

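Attach the hub-vcn to the DRG

Now create a VCN route table for the DRG attachment and point it to the private IP address of the secondary VNIC. Note that a VNIC used as a route target generally needs its source/destination check skipped so that it can forward traffic not addressed to itself.

Add a security list rule on this public subnet to accept requests from the spoke VCNs.

In the security list for the spoke VCN, add an ingress rule to accept requests from the hub VCN

Add the transit route to the DRG

Now, configure NAT on the hub-vcn compute instance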

sysctl -w net.ipv4.ip_forward=1

firewall-cmd --permanent --direct --add-rule ipv4 nat POSTROUTING 0 -o enp0s6 -j MASQUERADE

[root@primary-vnic opc]# firewall-cmd --permanent --zone public --add-interface enp0s6

The interface is under control of NetworkManager, setting zone to 'public'.

success

[root@primary-vnic opc]# firewall-cmd --permanent --zone public --add-masquerade

[root@primary-vnic opc]# systemctl restart firewalld

[root@primary-vnic opc]# firewall-cmd --reload

success

[root@primary-vnic opc]# sysctl -w net.ipv4.conf.all.rp_filter=2
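One thing worth keeping in mind: values set with `sysctl -w` are lost on reboot. Below is a minimal sketch of one way to persist the two settings used above by writing them to a drop-in file. The function name and the file name used in the note after the block are my own assumptions, not something from the original setup.

```shell
#!/bin/sh
# write_nat_sysctl_conf PATH: write the two forwarding settings used in
# this post to PATH, in sysctl.conf syntax. (Hypothetical helper.)
write_nat_sysctl_conf() {
  cat > "$1" <<'EOF'
# Settings for the hub NAT instance (same values as the sysctl -w commands).
net.ipv4.ip_forward = 1
net.ipv4.conf.all.rp_filter = 2
EOF
}
```

As root, you could call `write_nat_sysctl_conf /etc/sysctl.d/99-hub-nat.conf` (the file name is just an example) and then run `sysctl --system` to load it without rebooting.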

Next, I added the route for the secondary VNIC:

[root@primary-vnic opc]# ip route

default via 192.168.0.1 dev enp0s6

default via 192.168.0.1 dev enp0s6 proto dhcp src 192.168.0.49 metric 100

169.254.0.0/16 dev enp0s6 scope link

169.254.0.0/16 dev enp0s6 proto dhcp scope link src 192.168.0.49 metric 100

192.168.0.0/26 dev enp0s6 proto kernel scope link src 192.168.0.49

192.168.0.0/26 dev enp1s0 proto kernel scope link src 192.168.0.57

192.168.0.0/26 dev enp0s6 proto kernel scope link src 192.168.0.49 metric 100

[root@primary-vnic opc]# ip route add 10.10.0.0/26 via 192.168.0.1 dev enp1s0

Here, 10.10.0.0/26 is the CIDR of the Spoke1 private subnet.
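Before testing from the spoke, it can help to confirm that the kernel settings applied earlier are actually in effect on the hub instance. The following is a small sketch that reads the values straight from `/proc/sys`. The function name `check_sysctl` and the `SYSCTL_ROOT` variable are assumptions introduced for this example (the variable only exists so the check can be pointed at a different directory).

```shell
#!/bin/sh
# Root of the sysctl tree; overridable, e.g. to try the check on a fake tree.
SYSCTL_ROOT=${SYSCTL_ROOT:-/proc/sys}

# check_sysctl KEY WANT: compare the live value of KEY with WANT.
# Prints OK, MISMATCH, or MISSING; returns non-zero unless the value matches.
check_sysctl() {
  # Map a dotted key such as net.ipv4.ip_forward to its /proc/sys path.
  _path="$SYSCTL_ROOT/$(printf '%s' "$1" | tr . /)"
  if ! _have=$(cat "$_path" 2>/dev/null); then
    echo "MISSING $1"
    return 2
  fi
  if [ "$_have" = "$2" ]; then
    echo "OK $1=$_have"
  else
    echo "MISMATCH $1=$_have (want $2)"
    return 1
  fi
}
```

On the hub instance you would run `check_sysctl net.ipv4.ip_forward 1` and `check_sysctl net.ipv4.conf.all.rp_filter 2`. If either reports a mismatch, the spoke's packets are likely being dropped at the hub before NAT is applied.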

Now I try to ping google.com from an instance in the Spoke1 VCN:

Through this post, I wanted to show how the IP masquerading feature lets instances in a spoke VCN private subnet reach the internet, and how this can be achieved in a hub-and-spoke model. I hope this post helps someone.